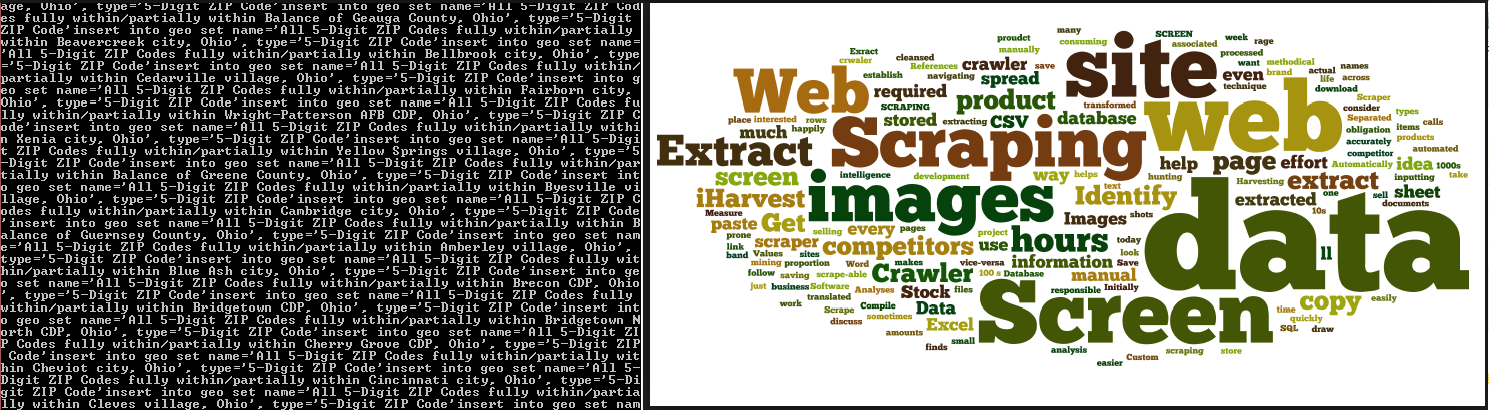

In today’s business world, data is probably the most important asset. Web scrapers, also called screen scrapers, are becoming an important tool for grabbing large amounts of data from websites.

How do you get information from a website into an Excel spreadsheet? The answer is screen scraping.

What is a screen scraper?

A screen scraper or web scraper is a small robot that reads websites and extracts pieces of information. When you can unleash a scraper on hundreds, thousands, or even millions of pages, it can be an incredibly powerful tool.

There are expert web scrapers around, and you may contact them for your customized needs.

Web scraping or screen scraping lets you extract information from websites automatically. A specialized program does the extraction, and the data is analysed later, either by software, by a web application, or manually.
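As a rough illustration of the idea, here is a minimal sketch in Python that pulls the cells out of an HTML table and writes them as CSV, a format Excel can open. It uses only the standard library. The names `TableScraper`, `html_table_to_csv` and `SAMPLE_PAGE` are made up for this example, and the page is an inline string rather than a real website; a real scraper would first download the page and would need to cope with messier markup.

```python
import csv
import io
from html.parser import HTMLParser


class TableScraper(HTMLParser):
    """Collects the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows, each a list of cell strings
        self._row = None    # row currently being read, or None
        self._cell = None   # text fragments of the current cell, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside a table cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None


def html_table_to_csv(html):
    """Return the table cells found in `html` as CSV text."""
    parser = TableScraper()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(parser.rows)
    return out.getvalue()


# Hypothetical page content, used here instead of a real download.
SAMPLE_PAGE = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""

if __name__ == "__main__":
    print(html_table_to_csv(SAMPLE_PAGE))
```

Saving the returned text to a `.csv` file gives something a spreadsheet program can open directly.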

On the other hand, if you have a website that serves a large amount of data that may be valuable to others, you may want to stop bots from screen scraping your site, because scraping can eat a large amount of bandwidth or because you do not want to give all of your data to someone else. The following are steps you can take to prevent this.

• Blocking an IP address, either manually or based on criteria such as geolocation and DNSRBL. This will also block all browsing from that address.

• Disabling any web service API that the website’s system might expose.

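• Bots sometimes declare who they are in their user agent string and can be blocked on that basis; ‘googlebot’ is an example. A robots.txt file can ask such bots to stay away, but only well-behaved bots honor it, so real blocking has to be done by the server. Other bots make no distinction between themselves and a human using a browser.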

• Bots can be blocked by monitoring for excess traffic.

• Bots can sometimes be blocked with tools that verify a real person is accessing the site, like a CAPTCHA. Bots are sometimes coded to explicitly break specific CAPTCHA patterns, or may employ third-party services that use human labor to read and respond to CAPTCHA challenges in real time.

• Commercial anti-bot services: Companies offer anti-bot and anti-scraping services for websites. A few web application firewalls have limited bot detection capabilities as well.

• Locating bots with a honeypot or other method to identify the IP addresses of automated crawlers.

• Obfuscation using CSS sprites to display such data as phone numbers or email addresses, at the cost of accessibility to screen reader users.

• Because bots rely on consistency in the front-end code of a target website, adding small variations to the HTML/CSS surrounding important data and navigation elements forces more human involvement in the initial setup of a bot. If done effectively, this may make the site too difficult to scrape, because the scraping process can no longer be automated easily.
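Two of the steps above, traffic monitoring and the honeypot, can be sketched together in a few lines of Python. This is only an illustration under stated assumptions: the class name `BotDetector`, the `/trap` path and the thresholds are invented for the example, and state is kept in memory for a single process. The idea is that a link to the honeypot path is hidden from human visitors, so any client that requests it is treated as a bot, and any client that sends too many requests within a time window is blocked as well.

```python
import time
from collections import defaultdict, deque


class BotDetector:
    """Flags IPs that exceed a request rate or touch a honeypot URL.

    All defaults here are illustrative assumptions, not recommended values.
    """

    def __init__(self, max_requests=5, window_seconds=10.0, honeypot_path="/trap"):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.honeypot_path = honeypot_path
        self._hits = defaultdict(deque)  # ip -> timestamps of recent requests
        self.blocked = set()             # IPs already identified as bots

    def allow(self, ip, path, now=None):
        """Return True if the request should be served, False if blocked."""
        now = time.monotonic() if now is None else now

        if ip in self.blocked:
            return False

        # Honeypot: humans never see this link, so only crawlers follow it.
        if path == self.honeypot_path:
            self.blocked.add(ip)
            return False

        # Sliding window: drop timestamps older than the window, then count.
        hits = self._hits[ip]
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        hits.append(now)

        if len(hits) > self.max_requests:
            self.blocked.add(ip)
            return False
        return True


if __name__ == "__main__":
    detector = BotDetector(max_requests=3, window_seconds=10.0)
    for second in range(5):
        # 203.0.113.7 is a documentation-only example address.
        print(second, detector.allow("203.0.113.7", "/prices", now=second))
```

In a real deployment the same check would sit in front of the web application, and blocks would normally expire after a while, since several legitimate users can share one IP address.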

You might seek help from experts if you feel that screen scraping of your site needs to be countered professionally.